OpenAI Sora

Creating video from text

OpenAI's Sora, announced on February 15, 2024, is a text-to-video model that turns text prompts into realistic and imaginative scenes. Its sample videos have set a new standard for AI video generation and storytelling.

This site gathers the latest updates and insightful discussions on Sora, serving as a premier destination for all news related to this advanced AI video model.

What’s new in Sora?

Extended Videos (up to 60s)

Sora breaks new ground by enabling the creation of AI-generated videos up to 60 seconds long, significantly extending the narrative possibilities and immersive experience for users.

Multi-Angle Shots

Sora can create multiple shots within a single generated video while keeping characters and visual style consistent from one shot to the next.

World Model

Sora can generate complex scenes with multiple characters, specific types of motion, and accurate details of subject and background. It models not only what the prompt asks for but also how those things exist in the physical world, which makes its scenes more lifelike.

Try Sora Online

Sora is not available yet. This website will be updated as soon as it’s available.

Sora Features

Video and Image Generation

Sora can produce a variety of high-definition videos and images, accommodating diverse durations, resolutions, and aspect ratios. It is adept at generating content for different devices at their native aspect ratios and can create videos up to a minute long, maintaining high fidelity.

Language Understanding and Text-to-Video Generation

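Sora relies on detailed captions to follow text closely. Applying the re-captioning technique from DALL·E 3, OpenAI trained a highly descriptive captioning model and used it to write detailed captions for the videos in Sora's training set, which improves both text fidelity and overall video quality. At generation time, GPT expands a user's brief prompt into a longer, detailed caption, so Sora can create videos that closely follow what the user asked for.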

Image and Video Editing

Sora's versatility extends to editing tasks: it can take an existing image or video as input and transform it according to a text prompt. It can create seamless loops, animate static images, extend videos forward or backward in time, and change a video's setting or style (for example, swapping its environment), all zero-shot, with no task-specific training.
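OpenAI's technical report says the style and environment changes are done with SDEdit, a diffusion editing technique: the input video is partly noised and then denoised again under the new prompt, so the result keeps the input's overall layout while the details follow the new text. The Python sketch below shows only that first noising step. It assumes the input video is already a NumPy latent array, and the function name `sdedit_start` and the mapping from edit `strength` to noise level are hypothetical, not Sora's actual settings.

```python
import numpy as np


def sdedit_start(input_latent: np.ndarray, strength: float, seed: int = 0):
    """Partially noise an existing video latent (the first step of SDEdit).

    strength is in (0, 1]: small values keep more of the input video,
    1.0 replaces it entirely with Gaussian noise.
    Returns the noisy latent and the noise level it corresponds to.
    """
    if not 0.0 < strength <= 1.0:
        raise ValueError("strength must be in (0, 1]")
    # Hypothetical mapping: alpha_bar is the fraction of signal variance kept.
    alpha_bar = 1.0 - strength
    noise = np.random.default_rng(seed).standard_normal(input_latent.shape)
    noisy = np.sqrt(alpha_bar) * input_latent + np.sqrt(1.0 - alpha_bar) * noise
    return noisy, alpha_bar


# Usage with a hypothetical latent; a real pipeline would next run the
# denoising loop from alpha_bar back to a clean video, conditioned on the new prompt.
latent = np.random.default_rng(1).standard_normal((8, 16, 24, 4))
noisy, alpha_bar = sdedit_start(latent, strength=0.3)
print(round(alpha_bar, 2))  # 0.7
```

A lower `strength` preserves more of the original motion and composition; a higher one gives the new prompt more freedom to change the scene.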

Simulation Capabilities

Trained at scale, Sora shows emergent properties that let it simulate aspects of the physical world, such as 3D consistency, object permanence, and interactions. It can generate videos with dynamic camera movement, keep people and objects consistent over time even when they are briefly hidden or leave the frame, and simulate simple actions that change the world, such as a painter leaving new strokes on a canvas or a person leaving bite marks in a burger. It can even simulate digital worlds: in Minecraft, it can control the player with a basic policy while rendering the game world in high fidelity.

The Apex of Video Generation Models: A Leap Towards AGI!

These features collectively position Sora as a promising candidate for the development of advanced simulators, capable of replicating the complexity of the physical and digital worlds.

Sora Examples

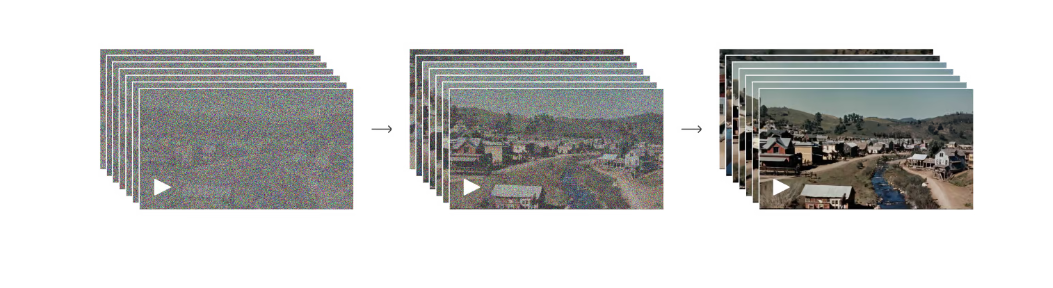

Sora's sample videos are striking: they are visually stunning and remarkably stable. The model turns text prompts into vivid, high-resolution scenes, and the results can be hard to tell apart from real footage, capturing the essence of the prompt with impressive accuracy and detail.

Sora stays stable even in long samples. Its ability to simulate complex scenarios, maintain object permanence, and render digital environments such as Minecraft shows its potential as a tool for creating immersive experiences. OpenAI's report argues that scaling video models like Sora is a promising path toward simulators of the physical and digital world, and some see this kind of world simulation as a step toward artificial general intelligence (AGI).

How does OpenAI create Sora?

OpenAI's Sora is like a super-smart artist that can bring your imagination to life in the form of videos. Here's how it works, in simple terms:

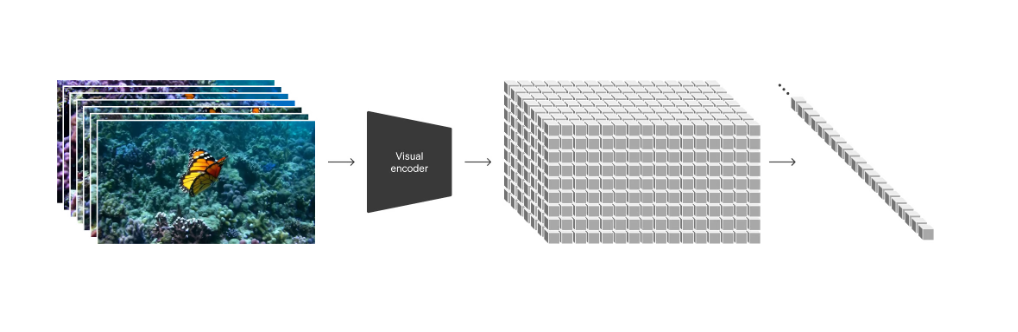

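Video Compression: Imagine Sora as an artist preparing a canvas. A video compression network first squeezes the raw video into a lower-dimensional latent space, a smaller, more manageable canvas that still holds the important details. This compressed video is then cut into small "spacetime patches", each covering a small area of the picture over a short stretch of time; these are the pieces Sora works with.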
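OpenAI's report describes this step as turning the compressed video into a sequence of "spacetime patches" that the transformer treats like words in a sentence. The report does not publish code, so the Python sketch below only illustrates the idea: it assumes the compression network has already produced a NumPy latent array of shape (T, H, W, C), and the patch sizes and the names `patchify` and `unpatchify` are hypothetical.

```python
import numpy as np


def patchify(latent: np.ndarray, pt: int, ph: int, pw: int) -> np.ndarray:
    """Split a latent video of shape (T, H, W, C) into flattened spacetime patches.

    Returns shape (num_patches, pt * ph * pw * C): one row per patch,
    ordered by time, then height, then width.
    """
    T, H, W, C = latent.shape
    if T % pt or H % ph or W % pw:
        raise ValueError("latent dimensions must be divisible by the patch size")
    x = latent.reshape(T // pt, pt, H // ph, ph, W // pw, pw, C)
    # Move the patch-index axes first and the within-patch axes last.
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)
    return x.reshape(-1, pt * ph * pw * C)


def unpatchify(tokens: np.ndarray, shape, pt: int, ph: int, pw: int) -> np.ndarray:
    """Inverse of patchify: rebuild the (T, H, W, C) latent from its patches."""
    T, H, W, C = shape
    x = tokens.reshape(T // pt, H // ph, W // pw, pt, ph, pw, C)
    x = x.transpose(0, 3, 1, 4, 2, 5, 6)
    return x.reshape(T, H, W, C)


# Usage: a hypothetical 8-frame, 16x24 latent with 4 channels.
latent = np.random.default_rng(0).standard_normal((8, 16, 24, 4))
tokens = patchify(latent, pt=2, ph=4, pw=4)
print(tokens.shape)  # (96, 128)
```

Because the patches are just rows of a matrix, a longer or larger video only changes how many rows there are, not the size of each row.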

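Learning to Reconstruct: Sora is a diffusion transformer. During training, it is shown spacetime patches of a video with random noise added, along with a text prompt, and learns to predict the original clean patches, much like an artist learning to turn a rough, smudged sketch into a finished drawing.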
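In the technical report, Sora receives noisy patches plus conditioning such as a text prompt and is trained to predict the original clean patches. The Python sketch below shows one such training example with a plain mean-squared-error loss. The noise schedule (a uniformly random `alpha_bar`), the `model` call signature, and the `text_embedding` argument are hypothetical simplifications; OpenAI has not published Sora's actual schedule, loss weighting, or conditioning details.

```python
import numpy as np


def add_noise(clean: np.ndarray, alpha_bar: float, rng: np.random.Generator) -> np.ndarray:
    """Blend clean patches with Gaussian noise; alpha_bar=1 keeps them unchanged."""
    noise = rng.standard_normal(clean.shape)
    return np.sqrt(alpha_bar) * clean + np.sqrt(1.0 - alpha_bar) * noise


def denoising_loss(model, clean, text_embedding, rng) -> float:
    """One training example: corrupt the patches, then score the model's clean guess."""
    alpha_bar = rng.uniform(0.0, 1.0)  # random noise level for this example
    noisy = add_noise(clean, alpha_bar, rng)
    predicted = model(noisy, alpha_bar, text_embedding)
    return float(np.mean((predicted - clean) ** 2))  # mean squared error


# Usage with stand-in "models" instead of a real transformer.
rng = np.random.default_rng(0)
clean = rng.standard_normal((96, 128))  # e.g. 96 patches of 128 values each


def oracle(noisy, alpha_bar, text_embedding):
    return clean  # a perfect denoiser


def lazy(noisy, alpha_bar, text_embedding):
    return np.zeros_like(noisy)  # always predicts all-zero patches


print(denoising_loss(oracle, clean, None, rng))  # 0.0
print(denoising_loss(lazy, clean, None, rng) > 0)  # True
```

Training lowers this loss across many videos and noise levels, which is what later lets the model clean up pure noise into a new video.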

Understanding Text: To make the video match your description, Sora leans on detailed captions. It was trained on videos paired with highly descriptive captions, and when you type a brief prompt, GPT first expands it into a detailed caption that guides the generation.
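According to OpenAI's report, GPT is used to expand a short user prompt into a longer, detailed caption before it reaches the video model, mirroring the detailed captions Sora saw during training. The Python sketch below only builds such a request in the common system/user chat-message format; it does not call any API, and the instruction wording and the function name `build_caption_request` are hypothetical.

```python
def build_caption_request(short_prompt: str) -> list[dict[str, str]]:
    """Wrap a brief video idea in chat messages asking an LLM for a detailed caption."""
    prompt = short_prompt.strip()
    if not prompt:
        raise ValueError("prompt must not be empty")
    # Hypothetical instructions: ask for detail without inventing new events.
    instructions = (
        "Rewrite the user's short video idea as one detailed caption. "
        "Describe the subject, setting, lighting, camera movement and visual style, "
        "and do not add events the user did not ask for."
    )
    return [
        {"role": "system", "content": instructions},
        {"role": "user", "content": prompt},
    ]


messages = build_caption_request("  a corgi surfing at sunset ")
print(messages[1]["content"])  # a corgi surfing at sunset
```

In a full pipeline, the language model's reply to these messages would be handed to the video model as its caption.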

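Creating Videos: Finally, Sora acts like a director. It lays out a grid of random noise patches sized for the requested duration and resolution, then removes the noise step by step, guided by your prompt, until clean patches remain. A decoder turns the result back into ordinary video frames. It's like painting a scene on the canvas, stroke by stroke, to create a unique artwork.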
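OpenAI's report says the size of a generated video is controlled at inference time by arranging randomly initialized patches in a grid of the appropriate size, which the model then denoises. The Python sketch below shows that loop with a deterministic DDIM-style update, a common diffusion sampler; the report does not say which sampler Sora uses, and `sample`, the noise schedule, and the `model` signature are hypothetical. The decoder that turns the final latent patches back into pixels is not shown.

```python
import numpy as np


def sample(model, grid, token_dim, text_embedding, steps=50, seed=0):
    """Denoise a grid of random patches into clean latent patches.

    grid = (nt, nh, nw) patches along time, height and width; choosing it
    fixes the duration and resolution of the generated video.
    """
    rng = np.random.default_rng(seed)
    nt, nh, nw = grid
    x = rng.standard_normal((nt * nh * nw, token_dim))  # randomly initialized patches
    alpha_bars = np.linspace(0.01, 1.0, steps + 1)  # from almost all noise to none
    for ab_t, ab_next in zip(alpha_bars[:-1], alpha_bars[1:]):
        x0 = model(x, ab_t, text_embedding)  # predicted clean patches
        eps = (x - np.sqrt(ab_t) * x0) / np.sqrt(1.0 - ab_t)  # noise implied by that guess
        x = np.sqrt(ab_next) * x0 + np.sqrt(1.0 - ab_next) * eps  # step to a lower noise level
    return x


# Usage with a stand-in model that always predicts the same all-ones video.
target = np.ones((4 * 4 * 6, 128))


def toy_model(x, alpha_bar, text_embedding):
    return target


out = sample(toy_model, grid=(4, 4, 6), token_dim=128, text_embedding=None)
print(out.shape, np.allclose(out, target))  # (96, 128) True
```

Asking for a longer or wider video only means passing a bigger `grid`; the loop itself does not change.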

Sora's approach is powerful because it can train on and generate videos of various lengths, resolutions, and aspect ratios: a longer or wider video simply becomes a longer sequence of patches. And because it is trained on richly captioned videos, it can follow text prompts closely, producing videos that are both realistic and tailored to your description. It's like having an artist who can paint any scene you describe and turn it into a one-of-a-kind masterpiece.
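To see why different lengths and aspect ratios pose no special problem, it helps to count the patches. The Python sketch below estimates how many spacetime patches a video becomes; the compression factors (8x in space, 4x in time), the 2x2 patch size, and 24 fps are hypothetical round numbers, since OpenAI has not published Sora's actual values.

```python
def ceil_div(a: int, b: int) -> int:
    """Integer division rounded up."""
    return -(-a // b)


def num_spacetime_patches(width: int, height: int, seconds: int, fps: int = 24,
                          spatial_down: int = 8, temporal_down: int = 4,
                          patch: int = 2, patch_t: int = 1) -> int:
    """Estimate how many patches (transformer tokens) a video turns into.

    All default factors are hypothetical, not Sora's published settings.
    """
    frames = seconds * fps
    latent_t = ceil_div(frames, temporal_down)  # compressed length in time
    latent_h = ceil_div(height, spatial_down)   # compressed height
    latent_w = ceil_div(width, spatial_down)    # compressed width
    return (ceil_div(latent_t, patch_t)
            * ceil_div(latent_h, patch)
            * ceil_div(latent_w, patch))


print(num_spacetime_patches(1920, 1080, seconds=10))  # 489600 (landscape)
print(num_spacetime_patches(1080, 1920, seconds=10))  # 489600 (portrait)
print(num_spacetime_patches(1920, 1080, seconds=20))  # 979200 (twice as long)
```

Under these assumptions, portrait and landscape versions of the same clip cost the same number of patches, and doubling the duration doubles the sequence length, so one transformer can handle all of them by working on longer or shorter sequences.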

Sora FAQs

Is Sora available for public use?

At the time of writing, Sora is a research model and is not open for public use. OpenAI has made it available to red teamers to assess potential harms and risks, and has given access to a number of visual artists, designers, and filmmakers for feedback. For the latest updates on availability, check OpenAI's official channels and announcements.

What is the quality of Sora's video generation?

Sora can generate high-fidelity videos up to a minute long in high definition, across diverse durations, resolutions, and aspect ratios. OpenAI's report shows that sample quality improves markedly as training compute increases. The published samples maintain strong temporal consistency and handle complex scenes well, although the report also notes limitations, such as not accurately modeling the physics of some basic interactions like glass shattering.

How does Sora differ from Runway?

Runway is a company whose platform gives creators and developers access to AI tools through a user-friendly interface, including its own video generation models such as Gen-2. Sora, on the other hand, is a single video generation model developed by OpenAI, known for generating high-fidelity videos from text prompts. In short, Runway is a platform that hosts multiple tools and models, while Sora is one model that could, in principle, be offered through such a platform.

What is the difference between Sora and Pika?

Pika is a generative AI video tool that specializes in creating short, high-quality videos from text prompts. It is designed to be user-friendly and accessible and is often used for social media content and short clips. Based on the samples OpenAI has published, Sora goes further, generating longer (up to a minute), high-definition videos with complex scenes and motion. The main difference lies in scope: Sora appears more versatile and able to produce longer, more detailed content.

What impact will Sora have on the future?

Sora's advanced video generation capabilities could revolutionize content creation, making it easier for creators to produce videos without the need for extensive video editing skills or resources. It could also impact industries like film, advertising, and gaming by providing tools for rapid prototyping and immersive experiences. As AI models like Sora continue to evolve, they may contribute to the development of more sophisticated AI systems, potentially leading to breakthroughs in artificial general intelligence (AGI) and the creation of more realistic digital simulations of the physical world.